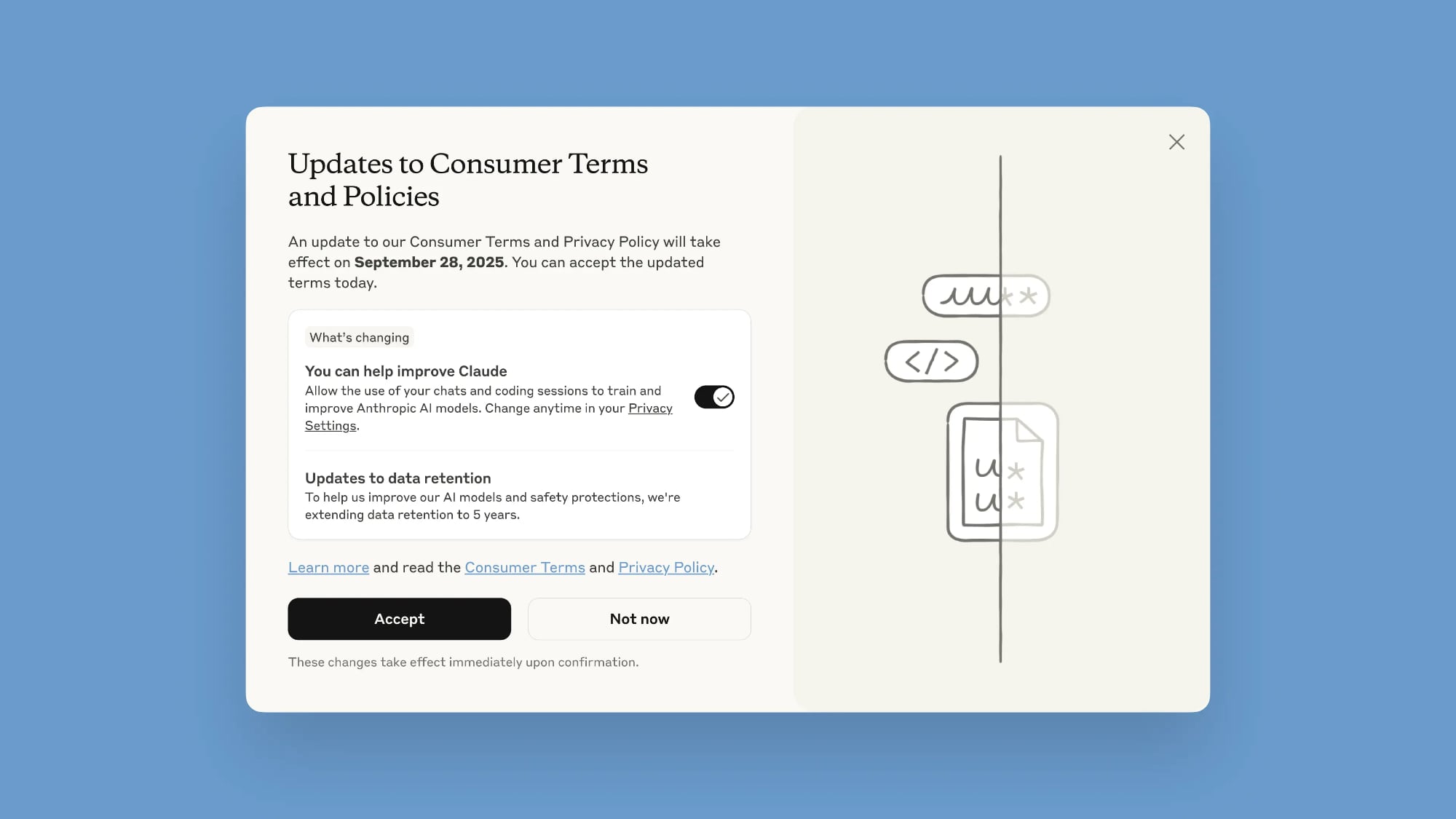

New users will be able to opt out at signup. Existing users will receive a popup that allows them to opt out of Anthropic using their data for AI training purposes.

The popup is labeled "Updates to Consumer Terms and Policies," and when it shows up, unchecking the "You can help improve Claude" toggle will disallow the use of chats. Choosing to accept the policy now will allow all new or resumed chats to be used by Anthropic. Users will need to opt in or opt out by September 28, 2025, to continue using Claude.

Opting out can also be done by going to Claude's Settings, selecting the Privacy option, and toggling off "Help improve Claude."

Anthropic says that the new training policy will allow it to deliver "even more capable, useful AI models" and strengthen safeguards against harmful usage like scams and abuse. The updated terms apply to all users on Claude Free, Pro, and Max plans, but not to services under commercial terms like Claude for Work or Claude for Education.

In addition to using chat transcripts to train Claude, Anthropic is extending data retention to five years. So if you opt in to allowing Claude to be trained with your data, Anthropic will keep your information for a five-year period. Deleted conversations will not be used for future model training, and for those who do not opt in to sharing data for training, Anthropic will continue keeping information for 30 days as it does now.

Anthropic says that a "combination of tools and automated processes" will be used to filter sensitive data, with no information provided to third parties.

Prior to today, Anthropic did not use conversations and data from users to train or improve Claude, unless users submitted feedback.

Tag: Anthropic

This article, "Anthropic Will Now Train Claude on Your Chats, Here's How to Opt Out" first appeared on MacRumors.com

